Retrieval Augmented Generation: How RAG helps AI in 2025

Retrieval Augmented Generation: The Smarter Way to Build Chatbots in 2025

AI chatbots are evolving fast, and 2025 marks the year they stop guessing and start knowing.

At the center of this shift is Retrieval Augmented Generation (RAG), a breakthrough that’s redefining how chatbots think, search, and respond.

If traditional AI chatbots were like memory-driven assistants, RAG chatbots are like assistants with a built-in research engine. They don’t just rely on what they’ve been trained on; they retrieve real information before answering. That single difference changes everything for accuracy, trust, and usability.

What Is Retrieval Augmented Generation?

Retrieval Augmented Generation, or RAG, is an advanced architecture that combines two powerful AI capabilities:

- Information retrieval – fetching relevant, real-world data from connected sources like documents, databases, or websites.

- Natural language generation – using a large language model (LLM) to craft coherent, context-rich responses.

Here’s how it works: when a user asks a question, the chatbot first searches its knowledge base for relevant information, typically by comparing embeddings (numerical representations of text) stored in a vector database. It then passes that information to an LLM, which generates a detailed answer grounded in the retrieved context.

This two-step process keeps your chatbot’s responses grounded in real data, not just generic AI predictions.

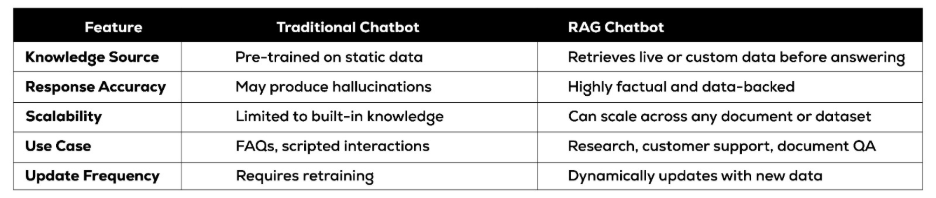

RAG Chatbots vs. Traditional Chatbots

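The difference between a traditional chatbot and a RAG chatbot is the difference between memory and research. A traditional chatbot answers only from what its model learned during training; a RAG chatbot first looks up relevant information in your data and answers from what it finds.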

RAG chatbots are ideal for industries like healthcare, finance, legal, and education, where accuracy and context are critical. They bring the best of both worlds: the creativity of LLMs and the reliability of structured knowledge.

Why RAG Matters Now More Than Ever

The biggest challenge with generative AI is accuracy. Even the best models like GPT-4 or Claude 3 can produce confident but incorrect responses.

That’s where Retrieval Augmented Generation comes in: it grounds answers in your verified data, making AI more accountable and reliable.

A few reasons why RAG is becoming the new standard in chatbot creation:

- Keeps data private and up to date: Your company data and documents stay in your own knowledge base instead of being trained into the model, and you can add or update them without retraining the model.

- Improves trust: Each response can cite its source, improving user confidence.

- Enables personalization: The retrieval layer allows chatbots to pull context-specific insights for each user.

- Reduces cost: No need for frequent fine-tuning or expensive retraining runs.

In short, RAG helps businesses build smarter, faster, and more trustworthy chatbots, something every organization will need as AI becomes customer-facing.

How the RAG Model Works: Step by Step

Let’s break down how a RAG model operates behind the scenes.

- Document Ingestion

The chatbot’s system ingests data from files, APIs, CRMs, or websites. These could be PDFs, FAQs, customer histories, or research archives.
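As a rough illustration, here is a minimal ingestion step in Python, assuming plain-text and Markdown files in a local folder. The `Document` class and `load_text_documents` function are hypothetical names for this sketch; PDFs, APIs, and CRMs would each need their own loader.

```python
from dataclasses import dataclass, field
from pathlib import Path


@dataclass
class Document:
    """A piece of source text plus metadata about where it came from."""
    text: str
    metadata: dict = field(default_factory=dict)


def load_text_documents(folder: str, extensions=(".txt", ".md")) -> list[Document]:
    """Read every plain-text file under `folder` (recursively) into a Document.

    Hypothetical helper: only handles plain-text files; other formats
    (PDFs, CRM exports, API responses) would need dedicated loaders.
    """
    docs = []
    for path in sorted(Path(folder).rglob("*")):
        if path.is_file() and path.suffix.lower() in extensions:
            text = path.read_text(encoding="utf-8")
            # Remember where the text came from so answers can cite it later.
            docs.append(Document(text=text, metadata={"source": str(path)}))
    return docs
```

Keeping the file path in `metadata["source"]` is what later allows an answer to link back to the original document.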

- Text Chunking

Large documents are split into smaller, digestible sections so the system can retrieve only the relevant pieces when needed.
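Chunking can be as simple as sliding a fixed-size window over the text. The sketch below shows one common approach, assuming character-based windows with a small overlap; `chunk_text` and its default sizes are illustrative, and many systems split on sentences, paragraphs, or tokens instead.

```python
def chunk_text(text: str, chunk_size: int = 500, overlap: int = 50) -> list[str]:
    """Split text into overlapping fixed-size character windows.

    The overlap keeps a sentence that straddles a boundary visible in both
    neighboring chunks. Default sizes are illustrative, not tuned values.
    """
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be in [0, chunk_size)")
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + chunk_size])
        # Stop once this window already reaches the end of the text.
        if start + chunk_size >= len(text):
            break
    return chunks
```

For example, `chunk_text("abcdefghij", chunk_size=4, overlap=2)` returns `['abcd', 'cdef', 'efgh', 'ghij']`; text near each boundary appears in two neighboring chunks.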

- Embeddings Generation

Each text chunk is converted into a vector (a numerical representation of meaning) using an embedding model, such as those offered by OpenAI or open-source models available on Hugging Face.
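To show the shape of this step without depending on any provider’s API, the sketch below uses a hashed bag-of-words vector as a stand-in for a real embedding model. `toy_embed` is a hypothetical helper: it only captures shared words, not meaning, so in practice you would replace it with a call to an actual embedding model.

```python
import hashlib
import math
import re


def toy_embed(text: str, dim: int = 256) -> list[float]:
    """Map text to a fixed-length, L2-normalized vector.

    Hashed bag-of-words stand-in for a learned embedding model: same
    interface (text in, fixed-size vector out), but no semantic understanding.
    """
    vec = [0.0] * dim
    for token in re.findall(r"[a-z0-9]+", text.lower()):
        # Stable hash (unlike Python's built-in hash) so a token always maps to the same slot.
        digest = hashlib.sha256(token.encode("utf-8")).digest()
        index = int.from_bytes(digest[:4], "little") % dim
        vec[index] += 1.0
    norm = math.sqrt(sum(v * v for v in vec))
    # Normalize to unit length; leave an all-zero vector (empty text) as is.
    return [v / norm for v in vec] if norm else vec
```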

- Vector Storage

These vectors, along with the original text and metadata such as the source document, are stored in a vector database (like Pinecone, Weaviate, or Chroma).
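A vector database stores more than vectors. This in-memory sketch (the class names are hypothetical, not any product’s API) keeps each vector together with its original text and metadata, and shows why updating knowledge does not require retraining: you remove a document’s old chunks and add new ones.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class VectorRecord:
    """One stored chunk: its embedding plus what is needed to use it later."""
    vector: list[float]
    text: str       # original chunk text, later pasted into the LLM prompt
    metadata: dict  # e.g. {"source": "faq.txt"}


@dataclass
class InMemoryVectorStore:
    """Hypothetical stand-in for a vector database (no persistence, no index)."""
    records: list[VectorRecord] = field(default_factory=list)

    def add(self, vector: list[float], text: str, metadata: Optional[dict] = None) -> None:
        self.records.append(VectorRecord(list(vector), text, dict(metadata or {})))

    def remove_source(self, source: str) -> int:
        """Drop every chunk from one document, e.g. before re-indexing it."""
        before = len(self.records)
        self.records = [r for r in self.records if r.metadata.get("source") != source]
        return before - len(self.records)

    def __len__(self) -> int:
        return len(self.records)
```

Re-indexing an updated document is then a `remove_source(...)` call followed by fresh `add(...)` calls, with no change to the model itself.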

- Retrieval and Querying

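When a user asks a question, the question is converted into a vector with the same embedding model, and the chatbot uses similarity search (for example, cosine similarity) to find the chunks closest to it.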
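Similarity search boils down to scoring stored chunks against the query vector and keeping the best matches. The sketch below does exact, brute-force cosine similarity in plain Python; `top_k` is a hypothetical helper, and production vector databases typically use approximate nearest-neighbor indexes to scale.

```python
import heapq
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def top_k(query_vector: list[float], chunks: list[tuple[list[float], str]], k: int = 3):
    """Return the k (score, text) pairs most similar to the query, best first.

    `chunks` is a list of (vector, text) pairs; every chunk is scored (exact search).
    """
    scored = [(cosine_similarity(query_vector, vec), text) for vec, text in chunks]
    return heapq.nlargest(k, scored, key=lambda pair: pair[0])
```

For this to work, the question must be embedded with the same model that embedded the chunks; vectors from different models are not comparable.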

- Answer Generation

The retrieved information is then passed into the LLM, which creates a cohesive, human-like response based on the real data it found.
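How the retrieved chunks reach the LLM is mostly a matter of prompt construction. Here is a minimal sketch, assuming each retrieved item is a dict with `text` and `source` keys; `build_grounded_prompt` and its instruction wording are illustrative, and the resulting string would be sent to whichever LLM API you use (not shown).

```python
def build_grounded_prompt(question: str, retrieved: list[dict]) -> str:
    """Assemble an LLM prompt that restricts the answer to retrieved context.

    Assumes each item looks like {"text": ..., "source": ...}. Numbering the
    passages lets the model cite them as [1], [2], ...
    """
    context = "\n\n".join(
        f"[{i}] ({item['source']})\n{item['text']}"
        for i, item in enumerate(retrieved, start=1)
    )
    return (
        "Answer the question using only the context below. "
        "Cite passages by their number, e.g. [1]. "
        "If the context does not contain the answer, say you don't know.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}\nAnswer:"
    )
```

Telling the model to admit when the context lacks an answer is a common way to discourage it from falling back on guesses.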

- Response Delivery

The chatbot delivers the final answer, often with references or links to the original data.
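Attaching references can be done after generation. A minimal sketch, assuming the sources of the retrieved chunks are available as a list of strings; `format_answer_with_sources` is a hypothetical helper.

```python
def format_answer_with_sources(answer: str, sources: list[str]) -> str:
    """Append a de-duplicated source list to a generated answer."""
    unique = list(dict.fromkeys(sources))  # removes repeats, keeps first-seen order
    if not unique:
        return answer
    return answer + "\n\nSources:\n" + "\n".join(f"- {src}" for src in unique)
```

For example, `format_answer_with_sources("Yes.", ["a.pdf", "b.pdf", "a.pdf"])` returns `"Yes.\n\nSources:\n- a.pdf\n- b.pdf"`.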

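This combination of retrieval and generation makes RAG-powered chatbots not only smarter but also easier to correct and explain: a wrong answer can be fixed by updating the source document, and answers can point back to the data they came from.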

Real-World Use Cases of RAG Chatbots

1. Customer Support Systems

Instead of generic replies, a RAG chatbot can access policy documents, manuals, or past interactions to deliver precise answers in seconds.

2. Healthcare Assistants

Medical chatbots can retrieve validated research papers or clinical guidelines before providing responses, reducing misinformation.

3. Legal and Compliance Helpdesks

RAG systems can pull the relevant clauses from legal documents, contracts, and regulations and answer questions about them, with references that compliance teams can verify.

4. HR and Employee Knowledge Bases

Instead of static wikis, employees can chat with an AI assistant that pulls accurate answers directly from company handbooks or training materials.

5. Ecommerce Product Guidance

A RAG chatbot can reference catalog data and reviews to recommend the right products with real details, not assumptions.

Why Businesses Are Switching to RAG

Businesses that integrate Retrieval Augmented Generation into their chatbot systems gain significant advantages:

- Reduced Hallucinations: Less guesswork, more grounded answers.

- Faster Onboarding: New data can be added by indexing documents, with no model retraining.

- Consistent Brand Voice: Answers keep the same tone even when they draw on multiple data sources.

- Better Search Experience: Customers no longer dig through FAQs; they just ask and get contextual responses.

And the best part: these chatbots evolve naturally as your knowledge base grows.

Botxpert: Making RAG Accessible to Every Business

Building a RAG chatbot sounds technical, but it doesn’t have to be.

At Botxpert, we’re bringing Retrieval Augmented Generation to businesses without engineering headaches.

Our no-code platform lets you upload your data, connect external sources, and create intelligent chatbots that understand context, retrieve real-time answers, and interact like human agents.

With botxpert, businesses can:

- Build a Retrieval Augmented Generation chatbot in minutes.

- Integrate with CRMs, websites, or internal tools.

- Automate support, lead qualification, and training using AI grounded in your own data.

Whether you’re a startup looking to scale customer support or an enterprise automating internal workflows, botxpert gives you the power of RAG without the complexity of coding.

The Future of Chatbot Creation Is Retrieval-Augmented

The RAG model is reshaping what it means to build AI assistants. It’s the evolution of chatbots: from conversational to truly contextual.

Instead of making things up, your AI can now pull facts from your data and respond intelligently.

For businesses, this means better customer engagement, higher trust, and more accurate automation, powered by technology that’s smart enough to learn, reference, and reason.

And with tools like botxpert, you don’t need a team of data scientists to get there. You just need your data, your goals, and a few clicks to bring your chatbot to life.

The next wave of AI isn’t about what models know; it’s about what they can find.

Start for free. Launch in minutes. Let your website talk.

Try botxpert today and build your ideal website chatbot.

Check out our other blogs to learn more: blogs.botxpert
